When AI Joins the Migration Team

by Marcin Prystupa | 05/11/2025

In this article, I want to share our experience of migrating a huge legacy AngularJS codebase to React in 2025. But here’s the twist: we aren't doing it the traditional way. We've leaned on AI tools like ChatGPT and Cursor to help us along the way. Some things worked great; others, not so much. This is our story, the lessons we learned, and where we think AI fits (and doesn’t) in modern dev workflows.

Setting the Scene: When Legacy Meets Opportunity

Let’s set the stage. Picture a large, messy AngularJS app that had been running for years, quietly powering operations for multiple accounting companies. It was one of the first web apps we ever built and, over time, it grew... and grew... and by 2025, it was showing its age.

AngularJS had been out of support for years (its long-term support ended in December 2021), the tooling had moved on, and getting new devs up to speed was painful. The codebase was full of one-off solutions, tangled CSS, tightly coupled logic, and brittle templates. Every fix risked breaking something else - you get the picture.

And yet, this app was still mission-critical. We couldn’t just switch it off, and a full rewrite wasn’t realistic either. So we were stuck choosing between "leave it alone and suffer" and "find a smarter way to migrate."

That’s when the idea popped up: could AI help?

By this point, tools like ChatGPT, Cursor, and Claude had proven surprisingly capable in side projects. So we figured - why not try them here? Maybe we could offload the grunt work - the repetitive, boilerplate-heavy parts of the migration - and focus our human effort where it actually mattered.

We weren’t sure if it would work, but we were also curious enough to try.

The Plan: AI as an Extra Pair of Hands

This wasn’t a desperate attempt to save time halfway through the migration - AI assistance was part of the plan from day one.

We went in knowing that migrating a legacy AngularJS project wouldn’t be fast or easy. But we also knew a big chunk of the work would be repetitive - things like converting templates to JSX, adding boilerplate props, lifting services into hooks, and rebuilding components in Storybook. Tedious, mechanical tasks.
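To give a sense of how mechanical some of this work is, here is a minimal sketch of the most trivial slice of template-to-JSX conversion: renaming HTML attributes to the names React DOM expects. The helper and its rename table are hypothetical, written for illustration; AngularJS directives such as `ng-if` or `ng-repeat` need real restructuring and are deliberately not handled.

```typescript
// Illustrative only: renames a few HTML attributes to the names React DOM expects.
// Hypothetical helper; AngularJS directives (ng-if, ng-repeat, ...) are not handled here.
const ATTRIBUTE_RENAMES: Record<string, string> = {
  class: "className",
  for: "htmlFor",
  tabindex: "tabIndex",
  maxlength: "maxLength",
};

function renameAttributesForJsx(template: string): string {
  // Only touch `name=` occurrences preceded by whitespace, so plain text such as
  // "Search for invoices" is left intact.
  return template.replace(
    /(\s)(class|for|tabindex|maxlength)=/g,
    (_match: string, space: string, name: string) => `${space}${ATTRIBUTE_RENAMES[name]}=`,
  );
}

const converted = renameAttributesForJsx(
  '<label for="amount" class="field-label">Amount</label><input id="amount" maxlength="10">',
);
console.log(converted);
// <label htmlFor="amount" className="field-label">Amount</label><input id="amount" maxLength="10">
```

The result still isn't valid JSX: the `<input>` needs to be self-closed, and any `{{ }}` bindings or `ng-*` directives still need converting. That is why even the "mechanical" parts needed a human to check them.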

So we asked: what if we let AI do the heavy lifting?

The idea was simple - use AI to draft the boring bits, then step in to polish, debug, and integrate. That division of labour made sense to us: AI wouldn’t decide on architecture or manage complex state, but it could definitely help sketch out components, generate structure, and save us from starting from scratch each time.

We ended up using three main tools:

- Cursor: A context-aware coding assistant that was incredibly helpful for in-place edits and code suggestions directly in the editor.

- ChatGPT: Versatile and predictable. We used it to run structured prompts and iterated on those prompts to get more consistent results.

- Claude: Surprisingly effective with large files, thanks to its long context window. It was useful for summarising big AngularJS components before conversion.

The key was treating these tools like junior teammates: fast, helpful, and in need of oversight. We didn’t expect perfection, just a boost, and that mindset shaped everything that followed.

Building the AI Workflow

Once we had a plan, the next step was turning it into a repeatable workflow - something we could scale across the hundreds of components we knew were waiting for us.

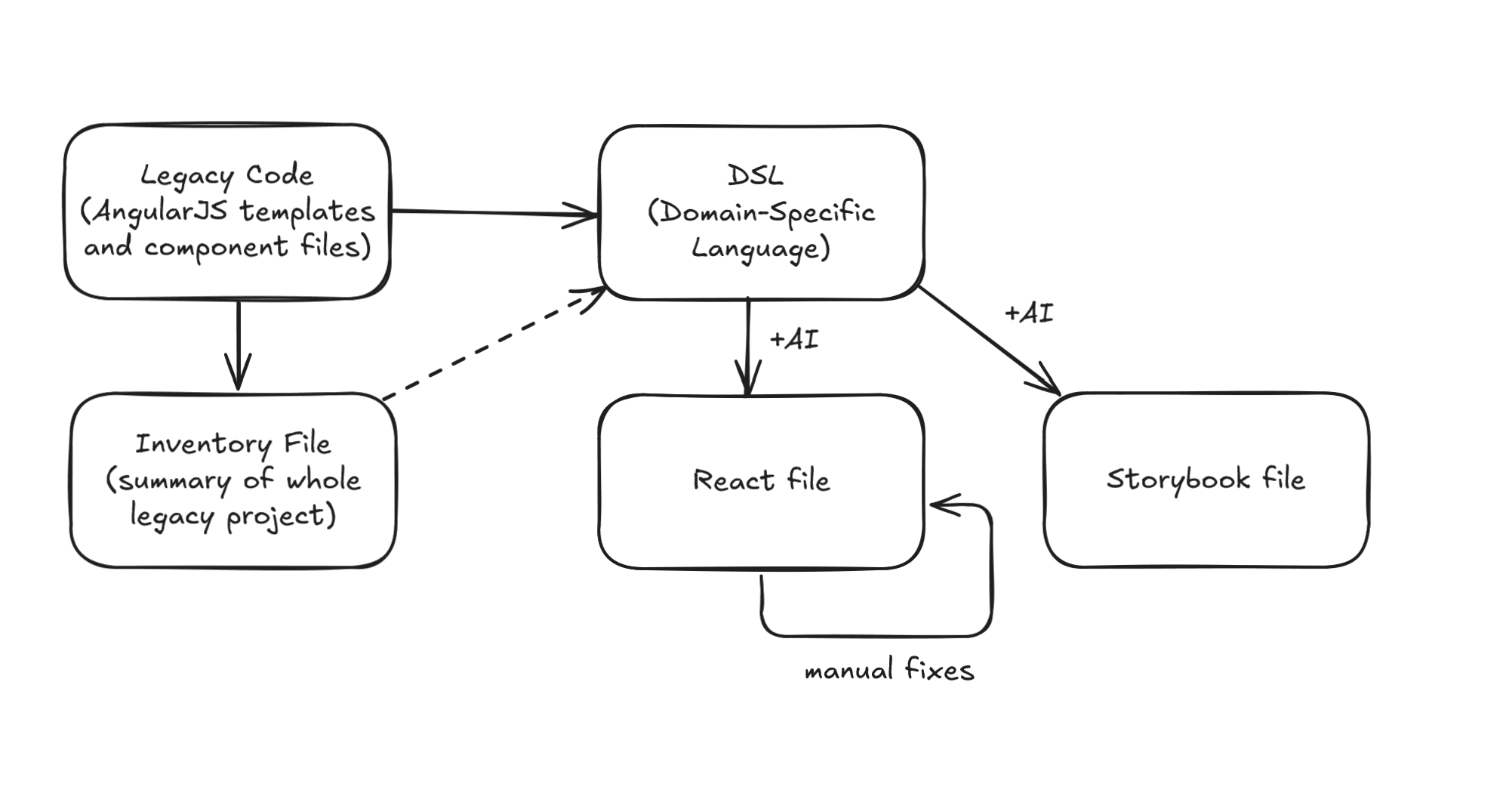

We started by generating a full inventory of the AngularJS project. This wasn’t glamorous work, but it gave us a bird’s-eye view of what we were actually dealing with: component types, routing structures, services, and even which parts were likely to explode on touch. Having that map was essential.
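The inventory doesn't need to be clever to be useful. Below is a minimal sketch of the idea. It assumes the sources are already loaded into memory as a map of file path to contents, and it uses regular expressions to find AngularJS registration calls (`.component(`, `.directive(`, `.service(`, `.factory(`, `.controller(`) and route definitions (`$routeProvider.when(` or ui-router's `$stateProvider.state(`). The helper name and output shape are hypothetical, and a regex scan will miss indirect or chained registrations.

```typescript
// Hypothetical inventory helper: counts AngularJS registrations per kind, per file.
type Kind = "component" | "directive" | "service" | "factory" | "controller" | "route";

const PATTERNS: Record<Kind, RegExp> = {
  component: /\.component\(\s*['"]/g,
  directive: /\.directive\(\s*['"]/g,
  service: /\.service\(\s*['"]/g,
  factory: /\.factory\(\s*['"]/g,
  controller: /\.controller\(\s*['"]/g,
  // Only the first call in a chain like $stateProvider.state(...).state(...) is counted.
  route: /\$(?:routeProvider\.when|stateProvider\.state)\(/g,
};

interface InventoryEntry {
  file: string;
  counts: Partial<Record<Kind, number>>;
}

function buildInventory(files: Record<string, string>): InventoryEntry[] {
  const entries: InventoryEntry[] = [];
  for (const file of Object.keys(files).sort()) {
    const counts: Partial<Record<Kind, number>> = {};
    for (const kind of Object.keys(PATTERNS) as Kind[]) {
      // String.prototype.match with a /g regex returns every match (or null).
      const hits = files[file].match(PATTERNS[kind]);
      if (hits) counts[kind] = hits.length;
    }
    entries.push({ file, counts });
  }
  return entries;
}

const inventory = buildInventory({
  "app/invoices/invoice-row.component.js":
    "angular.module('app').component('invoiceRow', { bindings: { invoice: '<' } });",
  "app/app.routes.js":
    "$routeProvider.when('/invoices', { template: '<invoice-list></invoice-list>' });",
});
console.log(JSON.stringify(inventory, null, 2));
```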

Next came the real unlock: designing a Domain-Specific Language (DSL) to describe components in a compact format. Why? Because token limits were killing us. Trying to feed full Angular components into ChatGPT or Claude would often hit context limits or result in half-finished answers. By reducing each component to its structural essence - inputs, outputs, HTML structure, bindings - we could feed much more context into the models while staying under the cap.
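As an illustration only (this is not our actual format), a compact DSL could look like the sketch below. It assumes each component has already been parsed into its name, its AngularJS `bindings` object, and its template. The one-letter line prefixes (`C`, `I`, `O`, `T`) and the helper names are hypothetical. It relies on the standard AngularJS binding symbols: `<` (one-way), `@` (string), `=` (two-way) and `&` (callback, treated here as an output), with `?` marking an optional binding.

```typescript
// Hypothetical compact DSL, illustrating the idea rather than the format we used.
interface AngularComponentInfo {
  name: string;
  // AngularJS `bindings` object, e.g. { invoice: "<", onSelect: "&" }
  bindings: Record<string, string>;
  template: string;
}

// Keeps only opening element names, in document order; attributes, text and
// closing tags are dropped, which is where most of the size reduction comes from.
function outlineTemplate(html: string): string {
  const tags = html.match(/<[a-zA-Z][\w-]*/g) ?? [];
  return tags.map((tag) => tag.slice(1).toLowerCase()).join(" ");
}

function toDsl(component: AngularComponentInfo): string {
  const inputs: string[] = [];
  const outputs: string[] = [];
  for (const prop of Object.keys(component.bindings)) {
    const spec = component.bindings[prop].trim();
    const mode = spec.charAt(0); // "<", "@", "=" or "&"
    const optional = spec.indexOf("?") !== -1 ? "?" : "";
    if (mode === "&") outputs.push(prop + optional); // callbacks become outputs
    else inputs.push(`${prop}${optional}:${mode}`);
  }
  return [
    `C ${component.name}`,
    `I ${inputs.join(",")}`,
    `O ${outputs.join(",")}`,
    `T ${outlineTemplate(component.template)}`,
  ].join("\n");
}

const dsl = toDsl({
  name: "invoiceRow",
  bindings: { invoice: "<", currency: "@?", onSelect: "&" },
  template:
    '<tr ng-click="$ctrl.onSelect()"><td>{{$ctrl.invoice.number}}</td>' +
    '<td class="amount">{{$ctrl.invoice.total | currency}}</td></tr>',
});
console.log(dsl);
// C invoiceRow
// I invoice:<,currency?:@
// O onSelect
// T tr td td
```

Note that the element outline is flat: it records which elements appear, not how they nest. A real DSL would probably need to keep at least some nesting for layout-heavy components.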

With the DSL in place, we crafted a master prompt - a carefully designed instruction set that told the AI how to generate a React version of the component, complete with a matching Storybook story. This prompt did a lot of heavy lifting: it handled layout, props, and even started translating Angular patterns into React ones. Of course, results varied - but it gave us a solid starting point.
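The shape of such a prompt can be sketched as a small builder function. The rules below are illustrative placeholders, not our real master prompt, and `buildMasterPrompt` is a hypothetical name. The one idea it encodes is fixed rules first, then optional worked examples, then the component spec to convert.

```typescript
// Hypothetical master-prompt builder; the rules are illustrative, not our real prompt.
const MASTER_RULES: string[] = [
  "Output one React function component in TypeScript and one Storybook story for it.",
  "Use only the inputs and outputs listed in the spec; never invent props, services or functions.",
  "Turn AngularJS callback ('&') bindings into callback props and all other bindings into regular props.",
  "Keep the element structure described in the spec.",
];

function buildMasterPrompt(spec: string, examples: string[] = []): string {
  const sections: string[] = [
    "You are migrating an AngularJS component to React.",
    "Rules:\n" + MASTER_RULES.map((rule, i) => `${i + 1}. ${rule}`).join("\n"),
  ];
  // Worked examples go before the target spec; in our experience even the order
  // of examples could change the quality of the output.
  if (examples.length > 0) {
    sections.push("Examples:\n" + examples.join("\n---\n"));
  }
  sections.push("Spec:\n" + spec);
  return sections.join("\n\n");
}

console.log(buildMasterPrompt("component invoiceRow; inputs: invoice; outputs: onSelect"));
```

Keeping the rules in one list made it easy to tweak a single instruction and regenerate, which is how most of the prompt iteration happens in practice.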

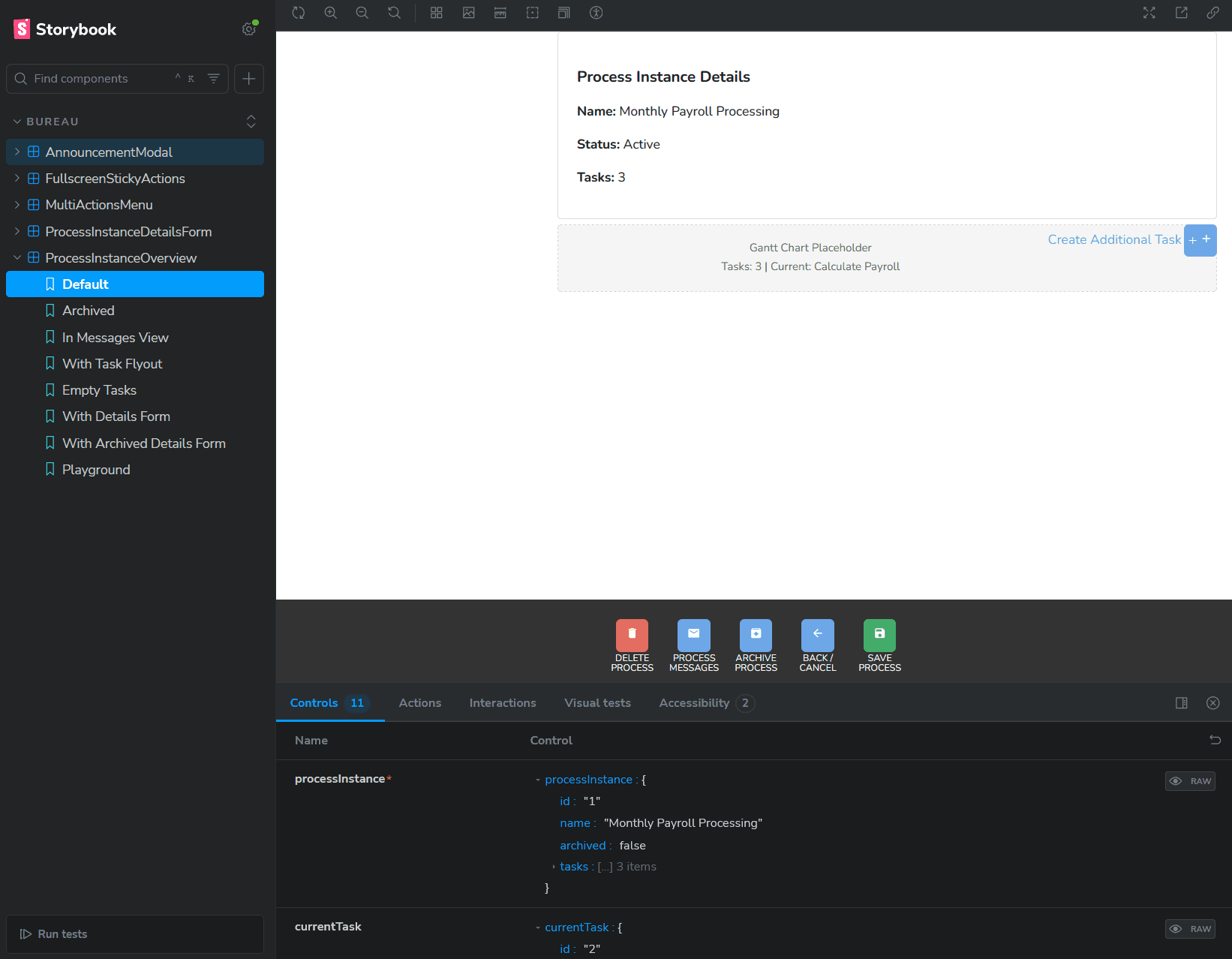

The final stage was manual validation, and for that, Storybook became our best friend. Every AI-generated component was wired into Storybook first, so we could quickly see what broke, what looked off, and what needed human intervention. It wasn’t perfect, but it gave us immediate visual feedback and became the central loop of our AI-assisted workflow: DSL in → AI out → Storybook review → manual fixes.

It wasn’t fast at first, of course. We tweaked prompts, retried generations, and rewrote components that fell apart. But slowly, the pieces clicked into place. We're building a system - semi-automated, human-guided, and weirdly satisfying when it works!

What Worked Surprisingly Well

While plenty of things needed hands-on attention, some parts of the workflow clicked into place better than we expected.

One of the biggest wins was how well AI handled repetitive conversions. Once we had a solid master prompt in place, generating basic React component scaffolds became fast and surprisingly consistent. Repetitive patterns like form inputs, layout wrappers, and modal structures were handled well enough to save us hours of manual boilerplate writing.

Storybook also turned out to be a stealth MVP. We originally included it just to help validate output visually, but over time, it became something more. For many of these components, this was the first time they had ever been visualised or documented in isolation. Storybook gave us an easy way to test, validate, and demo work - not just for us, but for QA and even Product folks.

Another unexpected win was how much we were able to improve the master prompt over time. Small adjustments - like including layout examples or reinforcing naming conventions - had a noticeable impact on output quality. Each iteration got us closer to output that felt like something a teammate would write.

Even onboarding got a boost. New developers could look at partially migrated components and understand the logic much faster than if they had to wrestle with legacy AngularJS structure.

And then there were the accidental wins. The migration process forced us to look at every single component - which meant we stumbled across loads of dead code, duplicate logic, and forgotten features.

Where It All (Slightly) Fell Apart

Of course, it wasn’t all smooth sailing. For every win, there was a moment where things completely fell apart - and some of those moments were more valuable than the successes.

First up: token limits. This was the main reason we introduced the DSL in the first place. Large components couldn’t fit into the context window as-is, and even if they did, they'd burn through tokens way too fast. The DSL allowed us to reduce each component to its structural essentials - inputs, outputs, and bindings - while keeping the output usable. It didn’t solve everything, but it allowed more consistent processing and kept the AI from drowning in too much noise.
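A crude budget check makes the problem concrete. This sketch assumes the commonly quoted rule of thumb of about four characters per token for English text (real tokenizers differ, and code often packs fewer characters into each token). It also assumes the answer has to share the context window with the prompt. The function names, the 8,000-token window and the 4,000-token output reservation are made-up illustrative values, not the limits of any particular model.

```typescript
// Rough heuristic only: ~4 characters per token for English text. Real tokenizers
// differ, so use the model provider's own tokenizer when exact counts matter.
function estimateTokens(text: string): number {
  return Math.ceil(text.length / 4);
}

interface BudgetCheck {
  promptTokens: number;
  fits: boolean;
}

// Checks that the prompt leaves room in the context window for the answer;
// running out of room is one way to end up with half-finished output.
function checkBudget(prompt: string, contextWindow: number, reservedForOutput: number): BudgetCheck {
  const promptTokens = estimateTokens(prompt);
  return { promptTokens, fits: promptTokens + reservedForOutput <= contextWindow };
}

// Builds a placeholder string of n characters (stands in for real source text).
function filler(n: number): string {
  return new Array(n + 1).join("x");
}

const rawComponent = checkBudget(filler(40000), 8000, 4000); // 10000 + 4000 > 8000, so fits is false
const compactSpec = checkBudget(filler(2000), 8000, 4000); // 500 + 4000 <= 8000, so fits is true
console.log(rawComponent, compactSpec);
```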

Then there were hallucinations. AI would invent props, services, or entire functions that looked totally plausible but didn’t exist in the source code. You’d get excited seeing the output... only to realise that none of it was real. Worse, it sometimes looked just close enough to be believable, which made it easy to miss the mistakes.
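One cheap guardrail against invented props is to compare what the generated code declares with what the source component actually exposes. The sketch below is a heuristic, not a TypeScript parser. It assumes the AI output declares a flat `...Props` interface (no nested object types), and the helper names are hypothetical. It can flag an invented or missing prop, but not an invented service or function.

```typescript
// Heuristic only: reads the first flat `interface SomethingProps { ... }` block.
function propsDeclaredIn(source: string): string[] {
  const block = /interface\s+\w*Props\s*\{([^}]*)\}/.exec(source);
  if (!block) return [];
  const names: string[] = [];
  // A member name sits at the start of the block or after ";" or a newline, so
  // parameter names inside function types such as `(id: string) => void` are skipped.
  const member = /(?:^|[;\n])\s*(?:readonly\s+)?(\w+)\??\s*:/g;
  let match: RegExpExecArray | null;
  while ((match = member.exec(block[1])) !== null) {
    names.push(match[1]);
  }
  return names;
}

// `expected` is the list of prop names taken from the original AngularJS bindings.
function diffProps(expected: string[], source: string): { invented: string[]; missing: string[] } {
  const declared = propsDeclaredIn(source);
  return {
    invented: declared.filter((name) => expected.indexOf(name) === -1),
    missing: expected.filter((name) => declared.indexOf(name) === -1),
  };
}

const generated = `
interface InvoiceRowProps {
  invoice: Invoice;
  onSelect: (id: string) => void;
  highlightOverdue?: boolean;
}`;
console.log(JSON.stringify(diffProps(["invoice", "currency", "onSelect"], generated)));
// {"invented":["highlightOverdue"],"missing":["currency"]}
```

A check like this only narrows the search; a plausible-looking prop that happens to share a real name still needs a human who knows what the component is for.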

The legacy styling also turned out to be a major trap. A lot of visual layout was driven by fragile selectors, deep nesting, or layout wrappers with side effects. Migrating a component often meant dragging legacy structure along just to preserve how it looked - or rewriting styles from scratch. Either way, not ideal.

Finally, the biggest limitation: AI had no idea about the app beyond the files we fed it. No domain awareness, no architectural context, no sense of “how this fits into the bigger picture.” That meant we still needed to be in the driver’s seat at all times.

So yes, AI helped. But without strong human oversight, it could just as easily lead you off a cliff.

Lessons Learned

One of the biggest early wins has been seeing how well AI performs when given structure. The DSL and master prompt gave the AI clear rails to follow, which dramatically improved consistency and reduced errors. The more structured we made the input, the better the output became.

We also learned that prompt design matters, and it matters a lot. Small tweaks to wording - even just changing the order of examples - had a noticeable impact on quality. It felt less like engineering and more like UX design: shaping the environment so the AI could succeed.

We confirmed what we suspected going in: human oversight is absolutely necessary. AI can draft, assist, and scaffold, but it can’t reason about domain logic or architecture. Someone still needs to know what the component is for and where it fits into the broader system.

There were some pleasant side effects too, of course! Our Storybook usage matured quickly: it turned into a proper source of truth and prompted us to learn more about it and expand its use across our projects. Personally, that's my favourite outcome of this experience!

Lastly, it became clear that AI is not a magic wand - but it is a productivity tool when used deliberately. You need a strategy, you need structure, and you need someone behind the wheel.

Reflections and Closing Thoughts

At the start of this endeavour, we asked ourselves: can AI speed up and assist big migration projects? Based on where we are so far, the answer is yes - but only if you respect its limits.

AI gave us momentum: it helped us avoid burnout on repetitive tasks, introduced better structure into our component library, and nudged us toward cleaner practices like proper Storybook usage. It didn’t write perfect code. It didn’t understand our app. But it’s been enough to keep us moving forward - and that’s a win in my humble opinion.

The real power came from combining AI’s speed with human judgement. With the right setup, it felt less like automation and more like collaboration - as if we’d added a slightly quirky but hard-working junior dev to the team, one that doesn’t sleep, but occasionally makes up functions that don’t exist (but hey, we've all been there as juniors, haven't we?).

So, would we do it again? Absolutely - but with even more structure, more guardrails, and a better understanding of what AI is good at (and where it still trips up). We’re still learning all about the power and limits of AI in our day-to-day work and this has definitely been a big step in the right direction.

Learn more about practical AI with Naitive

Ready to explore how AI could accelerate your own projects? See how we help teams turn AI potential into practical results – responsibly and effectively.

👉 Discover more at naitive.co.uk